What are brain-machine interfaces, and how do they work?

The simplest brain-machine interface, or at least the one we can use most readily, is the human hand. We’ve structured pretty much the entirety of computing around the input our hands can produce, and now, to a lesser extent, around our voices. But hands and voices are limited. Words, whether spoken or typed, are just representations of our real intentions, and the practice of moving the image of a mouse pointer within a simulated physical space creates even more abstraction between user and program. Translating our thoughts into computer-style commands, and then physically inputting them, is a slow process that takes time and attention away from the task at hand.

But what if a more direct form of brain-machine interface could widen that information bottleneck by sending commands not through nerves and muscles made of meat, but through wires and semiconductors made of metal and silicon? Then you’d have one big future path for medicine, and very likely for personal computing as well.

There are two basic types of interaction between the brain and a machine: info in, and info out. Info in generally takes the form of an augmented or artificial sensory organ sending its signals directly into the nervous system, like a cochlear or ocular implant. Info out, for instance controlling a bionic arm or a mouse pointer with pure thought, involves reading signals in the nervous system and ferrying them out to a computer system. The most advanced devices, like sensing bionic limbs, incorporate paths running in both directions.

It’s important to distinguish between devices that read and/or create neural signals directly in the brain, and those that work elsewhere in the nervous system and let the nervous system carry those signals to or from the brain on its own. Each approach has its advantages and disadvantages.

To understand the difference, take the example of a mind-controlled prosthetic arm. Early bionic control rigs almost all involved surgically implanting electrodes on the surface of the brain and using them to read and record brain activity. By recording the activity associated with all sorts of different thoughts (“Think about moving the mouse pointer up and to the left!”), scientists can teach a computer to recognize different intentions and execute the corresponding commands. This is extremely challenging for neural control technology, since the command we care about is only a tiny fraction of the overall storm of neural activity going on in the whole brain at any given instant.
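To make the “teach a computer to recognize different intentions” step concrete, here is a deliberately simplified sketch. It assumes each recorded thought has already been boiled down to a short vector of firing rates from a few electrodes, and it uses a nearest-centroid classifier, a technique chosen here for clarity rather than one the article attributes to any real system; practical decoders typically use more sophisticated statistical models. The function names, commands, and numbers are all hypothetical.

```python
import math
from collections import defaultdict


def train_centroids(trials):
    """Average the recorded feature vectors for each intended command.

    `trials` is a list of (features, command) pairs gathered during a
    hypothetical calibration session.
    """
    sums = {}
    counts = defaultdict(int)
    for features, command in trials:
        if command not in sums:
            sums[command] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[command][i] += value
        counts[command] += 1
    return {cmd: [s / counts[cmd] for s in vec] for cmd, vec in sums.items()}


def decode(centroids, features):
    """Return the command whose average pattern is closest (Euclidean distance)."""
    return min(centroids, key=lambda cmd: math.dist(centroids[cmd], features))


# Hypothetical calibration data: firing rates (spikes/s) on three electrodes,
# recorded while the user was asked to think about each movement.
training_trials = [
    ([12.0, 3.0, 5.0], "up-left"),
    ([11.0, 4.0, 6.0], "up-left"),
    ([2.0, 14.0, 4.0], "down-right"),
    ([3.0, 13.0, 5.0], "down-right"),
]

centroids = train_centroids(training_trials)
print(decode(centroids, [10.5, 4.5, 5.5]))  # up-left
```

The toy version hides the hard part the article describes: in a real brain, these few informative numbers are buried in activity from enormous numbers of neurons doing unrelated things.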

This computer-identification process is also basically an attempt at reinventing something far, far older than the wheel. Evolution created neural structures that naturally sift through complex, chaotic brain-born instructions and produce relatively simple commands to be ferried on by motor neurons; conversely, we also have structures that naturally turn the signals produced by our sensory organs into our nuanced, subjective experience.

Asking a computer to re-learn this brain-sifting process, it turns out, isn’t always the most efficient way of doing things. Often, we can get the body to keep doing its most difficult jobs for us, making real neural control both easier and more precise.

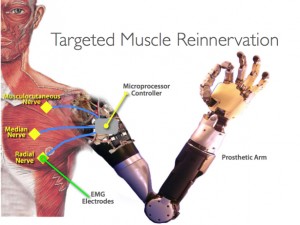

In neural prosthetics, there’s an idea called targeted muscle reinnervation. In some situations, it allows scientists to preserve a fragment of damaged muscle near the site of amputation and use that muscle to keep otherwise useless nerves alive. In an amputee these nerves are bound for nowhere, of course, but if kept healthy they will continue to receive signals meant for the missing phantom limb. As mentioned, these signals have already been distilled out of the larger storm of brain activity and neatly separated into the motor nerves of the arm, so they can be read much more easily. And since the user is sending motor commands down the very same neural paths they used before the amputation, control can feel natural almost immediately, with little in the way of a learning curve.

This idea, that we interact with the brain not through the brain itself but through a contact point somewhere else in the nervous system, works just as well for input technology. Many vision prosthetics work by stimulating the retina or optic nerve, and from there the artificial signals travel into the brain just like natural ones. They sidestep the difficulty of reliably stimulating only certain neurons in the brain, and once again let the body’s own signal-processing pathways do the hard work.

Of course, the strategy of using the nervous system to our benefit is limited by what nature has decided we ought to be able to do. It will probably always be easier and more effective to use pre-separated muscular signals to control muscle-replacement prosthetics, but we have no built-in mouse pointer control nucleus in our brain — at least, not yet. Eventually, if we want to pull from the brain whole complex thoughts or totally novel forms of control, we’re going to have to go to the source.

Direct brain reading and control has taken incredible steps forward, from super-advanced, injectable neuro-mesh to genetically induced optogenetic techniques that make neurons fire in response to light. Approaches are becoming both more invasive and less so, diverging into one group of super-high-fidelity but ultimately impractical designs, and another of lower-fidelity but more realistic over-the-scalp devices. Skullcaps studded with electrodes might not look cool, but you might still pull one on not too far into the future.

Long term, there’s almost no telling where these trends might take us. Will we end up with enlarged new portions of the motor cortex due to constant use of new pure-software appendages? Will we dictate to our computer in full thoughts? If you’re in a store and spy a sweater your friend might like, could you run it by them simply by remotely sending them the sensory feeling you get as you run your fingers over the fabric? Would this vicarious living be inherently any less worthwhile than having felt the fabric yourself?