That AI study which claims to guess whether you’re gay or straight is flawed and dangerous

Advancements in artificial intelligence can be extremely worrying, especially when serious questions of intimacy and privacy are at stake.

A study from Stanford University, first reported in The Economist, has sparked controversy after claiming AI can deduce whether people are gay or straight by analysing images of a gay person and a straight person side by side.

LGBTQ advocacy groups and privacy organisations have slammed the report as “junk science” and called it “dangerous and flawed”, citing a clear lack of representation, racial bias and the reduction of the sexuality spectrum to a binary.

“Technology cannot identify someone’s sexual orientation,” said Jim Halloran, Chief Digital Officer at GLAAD, the world’s largest LGBTQ media advocacy organization, which, along with the Human Rights Campaign (HRC), called on Stanford University and the media to debunk the research.

“What their technology can recognize is a pattern that found a small subset of out white gay and lesbian people on dating sites who look similar. Those two findings should not be conflated.”

The study’s authors, Michal Kosinski and Yilun Wang, have responded to the HRC and GLAAD press release by accusing the two groups of “premature judgement”:

Our findings could be wrong. In fact, despite evidence to the contrary, we hope that we are wrong. However, scientific findings can only be debunked by scientific data and replication, not by well-meaning lawyers and communication officers lacking scientific training.

If our findings are wrong, we merely raised a false alarm. However, if our results are correct, GLAAD and HRC representatives’ knee-jerk dismissal of the scientific findings puts at risk the very people for whom their organizations strive to advocate.

The study

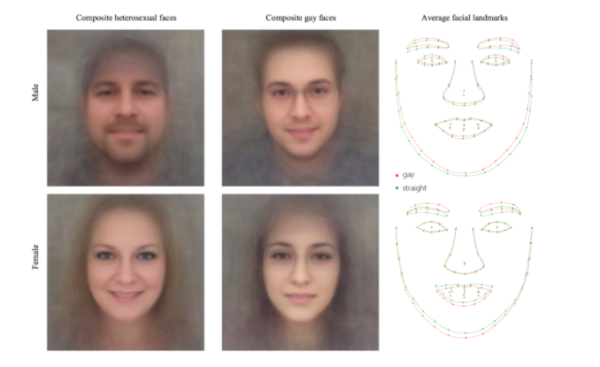

The research, by Michal Kosinski and Yilun Wang, was conducted on a publicly available sample of 35,326 pictures of 14,776 people from a popular American dating website.

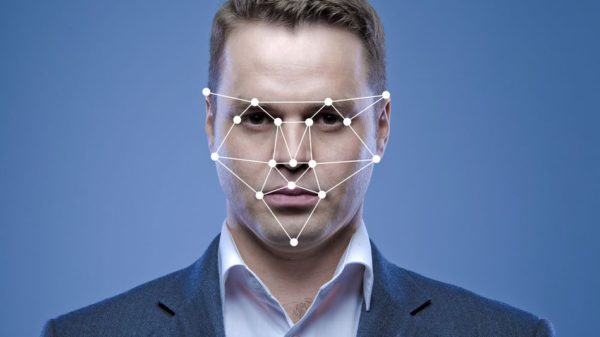

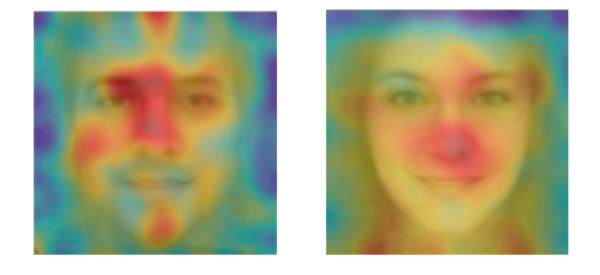

Using “deep neural networks” and facial-detection technology, Kosinski and Wang trained an algorithm to detect subtle differences in the images’ fixed and transient facial features.

When presented with photos of two randomly chosen participants, one gay and one straight, the model correctly identified which was which 81% of the time for men and 74% of the time for women.

The percentage rises to 91% for men and 83% for women when the software reviews five images per person.

In both cases, it far outperformed human judges, who were able to make an accurate guess only 61% of the time for men and 54% for women.
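The 81% and 74% figures come from a pairwise test: show the model one gay and one straight person and check whether it ranks the right one higher. Averaged over every possible pair, this is the same quantity statisticians call the area under the ROC curve (AUC). The minimal Python sketch below computes it; the function name is our own and the scores are invented for illustration, not taken from the study.

```python
from itertools import product


def pairwise_accuracy(pos_scores, neg_scores):
    """Share of (positive, negative) pairs in which the positive example
    gets the higher score; ties count as half a win.
    This pairwise measure is equivalent to the ROC AUC."""
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical classifier scores, made up purely for illustration.
gay_scores = [0.9, 0.7, 0.6, 0.4]
straight_scores = [0.8, 0.5, 0.3, 0.2]

print(pairwise_accuracy(gay_scores, straight_scores))  # 0.75 (12 of 16 pairs)
```

Because the measure is computed on balanced pairs, it says nothing about how rare gay people are in a real crowd, which is why a high pairwise score can coexist with many false alarms.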

Kosinski and Wang based their model on the prenatal hormone theory (PHT), which holds that exposure to hormones before birth helps shape sexual orientation.

Representation, labelling and racial bias

Besides the ethical issue of mining data from public dating websites, the study immediately raises questions of representation and labelling.

First, the study didn’t include any non-white individuals, assumed there were only two sexual orientations (gay and straight), and did not address bisexual individuals.

That is hardly representative of the LGBTQ community.

“Everyone involved was under 40, living in America, and willing to publicly put themselves (and their preferences) on a website,” Michael Cook, an AI researcher at Falmouth University working in games, generative systems, and computational creativity, tells Mashable.

“That group of people is probably very different to people in different age groups, in different countries, in different romantic situations.”

Another problem, according to Cook, is labelling: does the data actually label what we think it labels?

“The data grouped people based on whether they said they were looking for ‘men’ or ‘women’ on the dating app,” he says.

“So it greatly simplifies the gender and sexuality spectrum that people are on in real life, and it also means it erases information like bisexuality, asexuality, and people who are still unsure of, or closeted in, their sexual preferences.”

Dana Polatin-Reuben, technology officer at the advocacy group Privacy International, goes even further, saying the research is “dangerous” as its perceived veracity “threatens the rights and, in many cases, lives of LGBT people living in repression.”

“The research design makes implicit assumptions about the rigidity of the sexual and gender binary, given that those with a non-binary gender identity or sexual orientation were excluded,” Polatin-Reuben says.

Then there’s the issue of racial bias. Many AI researchers are white, and many photographic datasets are also dominated by white faces, Cook and Polatin-Reuben agree.

Researchers then tend to draw conclusions from, and train systems on, only those faces, and the resulting research “often doesn’t transfer at all to people whose appearances may be different,” Cook says.

“By only including photos of white people, the research is not only not universally applicable, but also completely overlooks who will face the gravest threat from this application of facial recognition, as LGBT people living under repressive regimes are most likely to be people of colour,” Polatin-Reuben adds.

Kosinski and Wang acknowledged some of the study’s limitations.

For example, they pointed out that the high accuracy rate doesn’t mean 91% of the gay men in a given population can be identified; it only applies when one of the two images presented is known to belong to a gay person.

In the real world, the accuracy rate would be much lower, as a simulation on a sample of 1,000 men with at least five photographs each showed.

In that simulation, the system selected the 100 men it judged most likely to be gay, but only 47 of them actually were.
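The gap between the 91% pairwise figure and the simulation’s result comes down to simple arithmetic. The Python sketch below computes the share of flagged men who were actually gay (precision) from the article’s numbers. The article does not give the base rate of gay men in the 1,000-man sample, so the 7% used for the random-guess comparison is a hypothetical assumption, and the helper names are our own.

```python
def precision_at_k(true_positives_in_top_k, k):
    """Share of the k people flagged by the model who truly belong to the target group."""
    return true_positives_in_top_k / k


def random_baseline(base_rate, k):
    """Expected number of true positives if k people were picked at random."""
    return base_rate * k


k = 100     # men flagged as most likely to be gay (from the article)
hits = 47   # of those, how many actually were (from the article)
ASSUMED_BASE_RATE = 0.07  # hypothetical share of gay men in the sample, NOT from the article

print(f"precision at top {k}: {precision_at_k(hits, k):.0%}")        # 47%
print(f"wrongly flagged: {k - hits}")                                 # 53
print(f"random pick would find about {random_baseline(ASSUMED_BASE_RATE, k):.0f}")  # 7
```

Under that assumed base rate the model does far better than chance, yet more than half of the men it flags are straight, which is the practical danger critics point to.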

GLAAD’s Halloran said the research “isn’t science or news, but it’s a description of beauty standards on dating sites that ignores huge segments of the LGBTQ community, including people of color, transgender people, older individuals, and other LGBTQ people who don’t want to post photos on dating sites.”

Privacy issues

Kosinski and Wang said they were so disturbed by the results that they spent a lot of time considering whether “they should be made public at all.”

But they stressed that their findings have “serious privacy implications”: with millions of facial pictures publicly available on Facebook, Instagram, and other social media, virtually anyone could try to infer people’s sexual orientation without their consent.

“We did not want to enable the very risks that we are warning against,” they said.

According to HRC, however, that’s exactly what they did.

“Imagine for a moment the potential consequences if this flawed research were used to support a brutal regime’s efforts to identify and/or persecute people they believed to be gay,” said HRC Director of Public Education and Research Ashland Johnson.

The researchers pre-emptively countered in the study that governments and corporations are already using such tools, and said they wanted to warn policymakers and LGBTQ communities about the huge risks they face if this technology falls into the wrong hands.

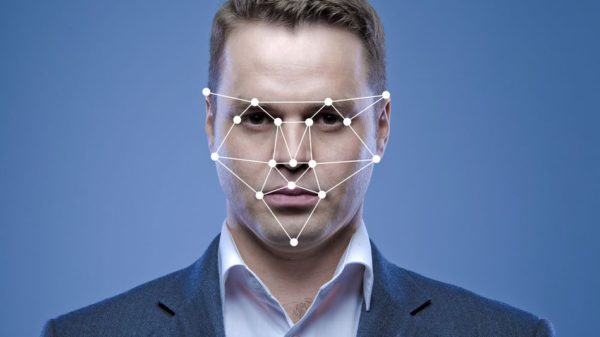

Facial images of billions of people are stockpiled in digital and traditional archives, including dating platforms, photo-sharing websites, and government databases.

Profile pictures on Facebook, LinkedIn, and Google Plus are public by default. CCTV cameras and smartphones can be used to take pictures of others’ faces without their permission.

According to Cook, this is the key point: with papers like this, what matters more than whether they are accurate is whether people will actually use them.

“If businesses wanted to use this to refuse service to gay people, it doesn’t actually matter if the system works or not — it’s still wrong and terrifying,” Cook said.

“The thing that makes this technology really scary is that AI and computers have an aura of trustworthiness about them — it feels scientific and acceptable to do something hateful through a computer.”

GLAAD and HRC said they had spoken with Stanford University months before the study’s publication, but there was no follow-up on their concerns.

They concluded: “Based on this information, media headlines that claim AI can tell if someone is gay by looking [at] one photo of your face are factually inaccurate.”