Don’t Expect Any New Apple Intelligence Features in iOS 19

Recent rumors suggest that Apple will have some significant design changes to show off when it unveils the next version of its iPhone operating system at this year’s Worldwide Developers Conference (WWDC) in June. However, it’s unlikely that we’ll see any AI features among them.

That probably shouldn’t be a big surprise, considering Apple has yet to deliver all of the Apple Intelligence features it promised at last year’s WWDC. While we’ve gotten most of the big ones, including Writing Tools, Notification Summaries, ChatGPT integration, Image Playground, and Genmoji, the one that was perhaps the most exciting — a much improved Siri — won’t be coming to the iOS 18 family of updates.

There are indications that Apple originally had these Siri improvements on the roadmap for iOS 18.4, which is now due to be released in April. However, a mid-February report revealed that it would miss that release, and last week Apple dashed our hopes for even an iOS 18.5 debut with an official statement placing its rollout “in the coming year” rather than over the next few months.

This lines up with multiple reports that suggest Apple has been having trouble getting its more personally aware Siri working to its satisfaction. On Friday, it pulled the Bella Ramsey ad promoting the feature, and this week it’s been gradually scrubbing its website of references to the personal context aspect of the Siri improvements.

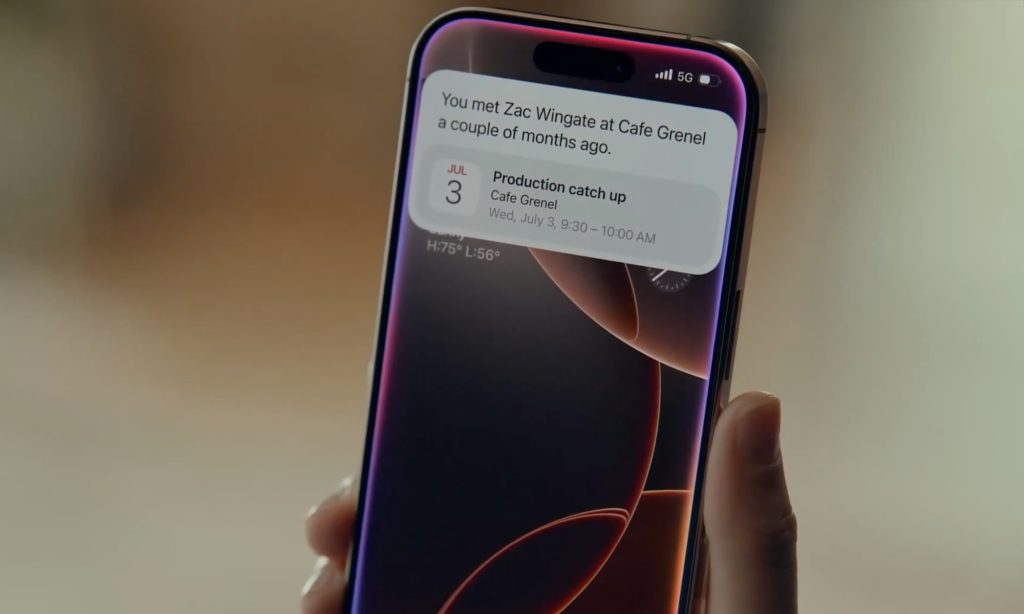

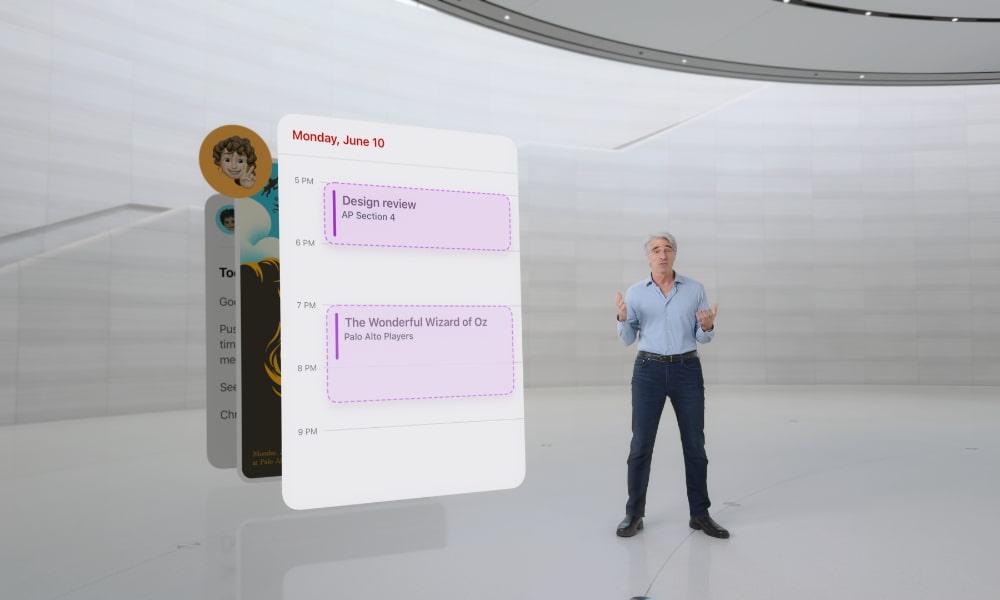

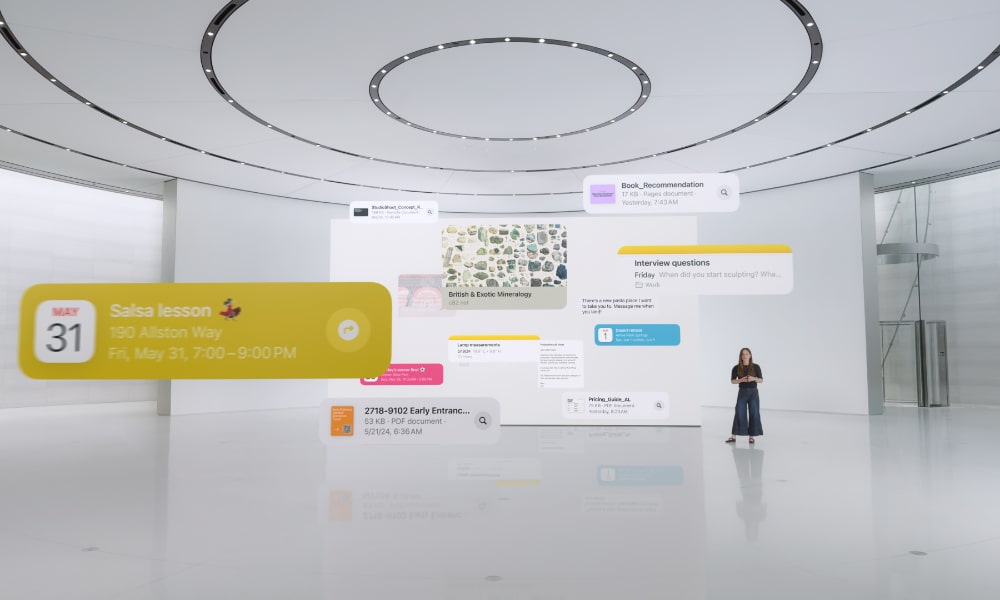

In its June 2024 WWDC presentation, Apple promised AI improvements that would allow Siri to work more like a human assistant, understanding context and digging through your apps to ferret out relevant information. Examples given at the time included finding out details on a family member’s arrival time by simply asking “What time does my mom’s flight land” or asking Siri if one could make it to their child’s school play after their last meeting. In both cases, Siri would do the same type of work a human assistant would, checking calendar appointments, emails, notes, PDF invites in Messages and Mail, locations and travel times in Apple Maps, and flight tracking information on the web. It would distill this all into a simplified answer.

Like most tech demos, what Apple showed was impressive, but in retrospect, it’s unlikely that Siri had these capabilities last June; what we saw instead was a heavily scripted sequence of events.

While Apple’s challenges with Siri might seem unusual compared to powerful chatbots like OpenAI’s ChatGPT and Google’s Gemini, there’s an extra dimension to adding this kind of personal context that these other AI assistants don’t need to face. Apple needs to do this reliably — a voice assistant that can’t reliably provide accurate information is worse than none at all — and Apple has to do it securely, since it’s accessing your personal information. Apple doesn’t want to risk exposing that even to its own cloud servers.

The privacy and security aspect may be one of the most significant challenges that Apple is facing here. Developer Simon Willison shared some insights over the weekend, explaining that he had a “hunch that this delay might relate to security.” Willison notes that Apple’s goals to have Siri access private data in apps makes it very vulnerable to “prompt injection attacks” — a flaw that’s present in all large language models (LLMs) that can allow malicious instructions to be inserted into the pipeline that could convince the AI bot to give up that private data to an attacker.

This is the worst possible combination for prompt injection attacks! Any time an LLM-based system has access to private data, tools it can call, and exposure to potentially malicious instructions (like emails and text messages from untrusted strangers) there’s a significant risk that an attacker might subvert those tools and use them to damage or exfiltrating a user’s data.

Simon Willison

Given Apple’s focus on privacy, this is a problem it needs to solve before giving a more advanced Siri unfettered access to read and analyze all our emails and messages.

Prompt injection is a challenge for all LLMs, but it’s far less of an issue for bots like ChatGPT with little to no access to personal information. There are currently ways for software engineers to mitigate these risks, but none have discovered a way to eliminate the dangers entirely. Getting this as secure as it needs to be will be a very tall order for Apple, so it makes sense that this will be its only major AI focus for iOS 19.

That doesn’t mean we won’t see an expansion of existing Apple Intelligence features in iOS 19. “Apple is unlikely to unveil groundbreaking new AI features,” in iOS 19, Bloomberg’s Mark Gurman said earlier this week. “Instead, it will likely lay out plans for bringing current capabilities to more apps.”

While Writing Tools are already quite pervasive, available nearly everywhere you can enter text into an app, there’s room for other tools like Image Playground and Genmoji to find their way into other apps. For instance, Image Playground could be integrated into Contacts and Music to generate avatars for people and playlist artwork for albums. Apple could also enhance its Journal app with more AI features to help prompt and inspire new entries. Those will be small improvements, but they’ll help extend the current suite of Apple Intelligence features and make them more useful while Apple plays catch-up on Siri and the other things it has planned.