Controversial Apple Child Protection Feature Rolls Out to More Countries | What Is It and Why Does It Matter?

Credit: Apple

The new expansion was first reported by The Guardian, which noted that it was coming to users in the U.K.; however, Apple has since confirmed that it’s also rolling out in Canada, Australia, and New Zealand.

While The Guardian didn’t offer a timeline, the feature appears to be available already in Canada, at least in the latest iOS 15.5 betas. It’s unclear whether the rollout will require the newer version of iOS 15; Apple has yet to update its support document to reflect the wider rollout, but in the U.S., the feature only requires iOS 15.2 or later.

The feature remains largely unchanged in these other countries from last year’s original U.S. release. The only significant difference is that links to “Child Safety Resources” point to websites specific to each country. For example, in Canada, it will take parents and kids to the appropriate pages on ProtectKidsOnline.ca and NeedHelpNow.ca, both of which are run by the Canadian Centre for Child Protection.

Last summer, Apple announced three new initiatives related to online child safety. The most controversial of these would have involved scanning all photos uploaded to iCloud by any user for possible Child Sexual Abuse Material, or CSAM.

However, that’s not what Communication Safety in Messages is at all. This opt-in feature is specifically designed to protect kids from being harassed by unwelcome and inappropriate images in the Messages app.

Unfortunately, Apple admitted it screwed up by announcing too many things at the same time, leaving many users confused about which feature did what. Apple quickly delayed its plans, announcing that it would take time to get more feedback from privacy and child safety advocates before rolling out any of the new features.

When Communication Safety in Messages finally arrived in iOS 15.2, it was clear that Apple had listened to that feedback, as it made one fundamental change from what was initially proposed.

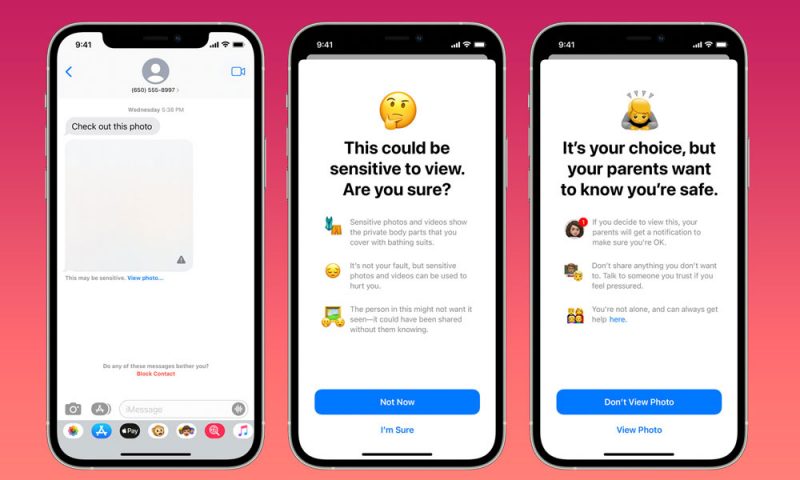

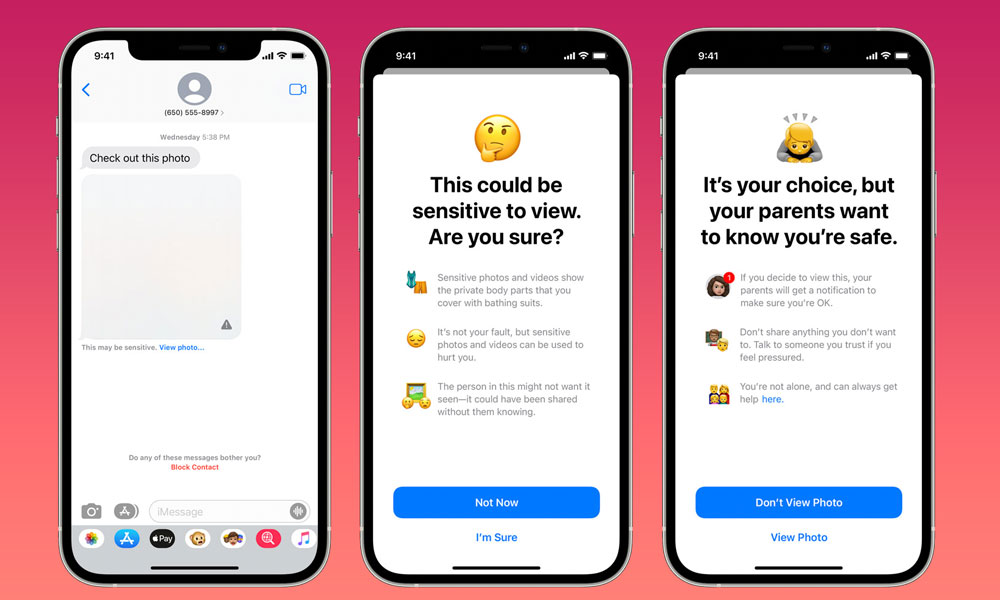

- At its core, the new communication safety feature will use on-device machine learning to detect and blur out any images sent to or received by children that are identified as containing sexually explicit material or nudity.

- Kids will see a warning telling them that they should think twice about viewing or sending the image in question.

- They can choose to override that warning and view the image anyway, but as part of that process, they will receive additional guidance and links to online resources where they can get help.

- However, under no circumstances will parents be notified unless the child chooses to tell them.

This last point is what’s changed from Apple’s original design, which would have automatically sent notifications to the parents of kids under 13 years of age. Those younger kids would have still been warned that choosing to view the image would notify their parents, but if they decided to view it anyway, the notification would be sent and there’d be nothing they could do to stop it.

However, child safety advocates made the very valid point that this could put kids at risk. For example, young children who are queer or questioning or even just allies could get outed and abused by intolerant parents.

As a result, Apple wisely chose to eliminate this part of the Communication Safety in Messages feature. As it stands now, the system works the same whether a child is 5 or 15: inappropriate images will be blocked, advice will be given, but the child will still be permitted to override the system and view (or send) the picture anyway.

Even aside from the abuse ramifications, this change is a good idea. Technology should never be a substitute for good parenting.

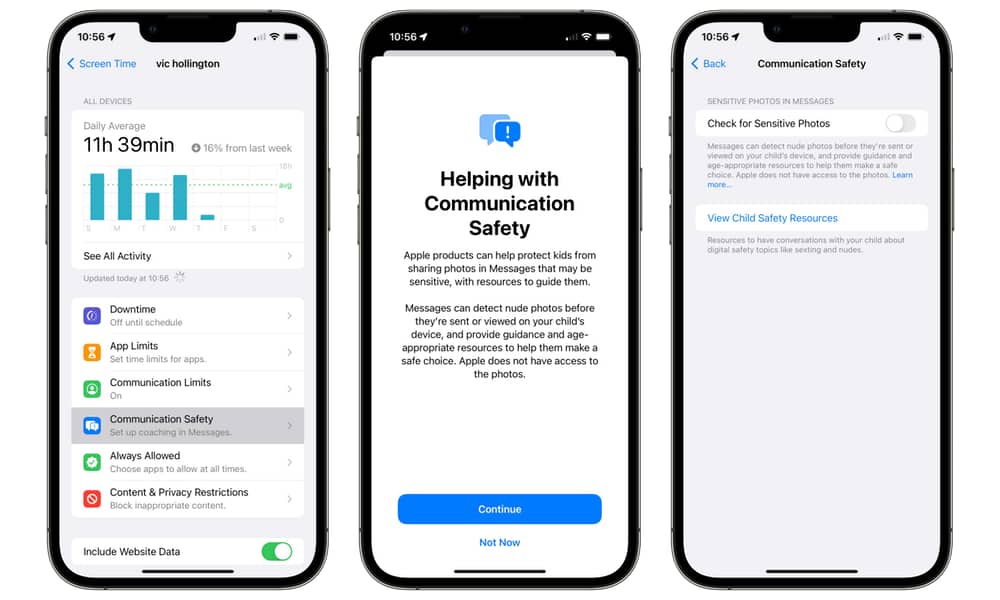

It’s also worth mentioning that this system is not automatically enabled. Parents must opt in on behalf of their kids, and you won’t even see the feature unless you’re part of an Apple Family Sharing Group that includes children under the age of 18.

If you’re a parent who fits into this category, you can opt into the feature under the normal Screen Time settings, where you’ll see a new Communication Safety option for each of your kids’ screen time profiles.

Contrary to much of the negative hype from last year, Apple never intended to scan all of the photos on your iPhone. Even the potentially much more invasive CSAM detection feature would have only scanned photos that were being uploaded to iCloud. That scan would have run on your iPhone before the upload occurred, but it still would have been limited to images that were about to be stored on Apple’s servers.

In fact, Apple has been eerily quiet about the CSAM feature — to the point where many suspect it may just let it die of natural causes. A more cynical take is that Apple could choose to release it behind the scenes more quietly; however, it’s also fair to say that Apple isn’t that stupid — the company would get caught, and when it did, the negative publicity would undoubtedly cause tangible repercussions, not the least of which would be its stock taking a nosedive.

Again, though, that’s not what the communication safety feature is. It’s extremely limited in scope.

- It does nothing unless you enable it.

- Even if it’s enabled, it doesn’t scan or analyze any photos for adult users.

- When enabled for a child who is part of a Family Sharing group, only photos sent and received in the Messages app are scanned.

- Nothing is scanned in the Photos app, iCloud Photo Library, or any other app on the child’s device.

- All analysis occurs entirely on the child’s device; no information is sent to Apple’s servers.

- Nobody other than the child is notified when a suspicious image is detected.

- Parents can turn the feature off at any time.

- It’s automatically disabled for a child on their 18th birthday.
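For readers who think in code, the scope rules above can be sketched as a simple check. This is only an illustration of the rules as listed in this article, not Apple’s implementation; the `Account` type, its fields, and both function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Account:
    """Hypothetical model of a user account; not an Apple data structure."""
    age: int
    in_family_sharing_group: bool
    communication_safety_enabled: bool  # assumed to be set by a parent in Screen Time


def should_analyze(account: Account, app: str) -> bool:
    """Return True only when every scope rule listed above holds."""
    if not account.communication_safety_enabled:
        return False  # does nothing unless a parent enables it
    if account.age >= 18:
        return False  # never applies to adults; off from the 18th birthday
    if not account.in_family_sharing_group:
        return False  # only offered to children in a Family Sharing group
    return app == "Messages"  # Photos, iCloud, and third-party apps are out of scope


def who_is_notified(image_flagged: bool) -> List[str]:
    """Only the child sees a warning; parents and Apple are never notified."""
    return ["child"] if image_flagged else []
```

Under these assumptions, `should_analyze(Account(12, True, True), "Messages")` is true, while the same child in any other app, or any adult account, is never analyzed.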

Of course, since this only works in Apple’s Messages app, it’s also ridiculously easy for a child to bypass unless their parent locks them out of other messaging platforms. Apple will not analyze photos or videos on Snapchat, Instagram, Facebook Messenger, TikTok, Discord, or any other third-party app that a child uses.