Apple Rolling Out Communication Safety Feature for Children to Six New Countries

Credit: Apple

According to a newly published report by iCulture, Apple will soon expand its Communication Safety feature to six more countries: the Netherlands, Belgium, Sweden, Japan, South Korea, and Brazil.

Communication Safety is currently available in the United States, the United Kingdom, Canada, New Zealand, and Australia. The feature was included in the macOS 12.1, iOS 15.2, and iPadOS 15.2 updates and requires accounts to be set up as “families” in iCloud.

It’s a privacy-focused, opt-in feature that parents must enable for the child accounts in their Family Sharing plan. All image detection is handled directly on the device, and no data is sent from the iPhone.

The feature first rolled out in the United States as part of iOS 15.2 and is now part of iMessage. On devices used by children, it examines images in inbound and outbound messages for nudity.

How Does it Work?

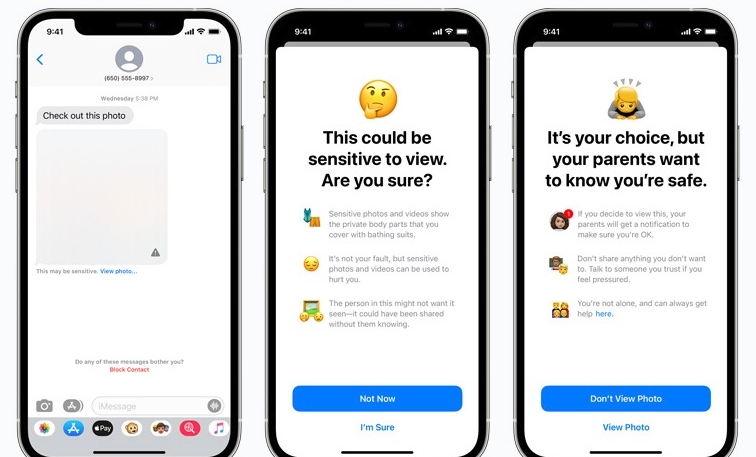

When content containing nudity is received, the photo is automatically blurred and the child is shown a warning that includes helpful resources and assures them that it is okay not to view the photo. The child can also be warned that, to ensure their safety, their parents will receive a message if they choose to view it.

Similar warnings will be available if underage users attempt to send sexually explicit photos. The minor will be warned before the photo is sent, and parents will also receive a message if the child chooses to send the image.

In both cases, the child will be given the option to message someone they trust to ask for help.

The system will analyze image attachments and determine whether or not a photo contains nudity. End-to-end encryption of the messages is maintained during the process. No indication of the detection leaves the device. Apple does not see the messages, and no notifications are sent to the parent or anyone else if the image is not opened or sent.

Apple’s Additions and Reaction to Previous Concerns

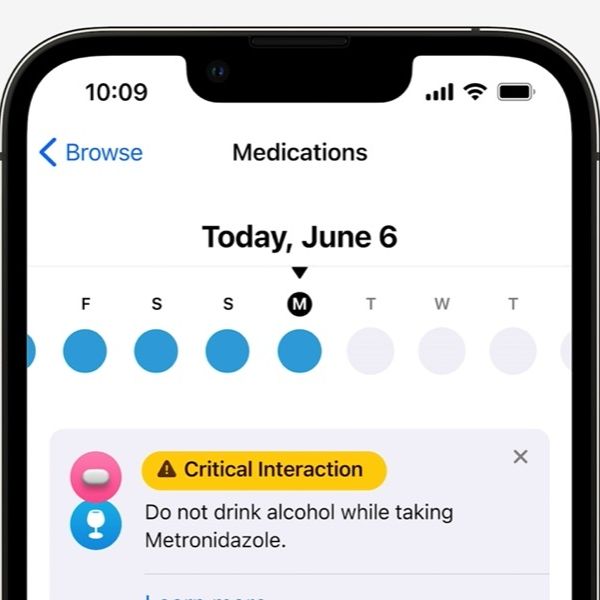

Apple has also provided additional resources in Siri, Spotlight, and Safari Search to help children and parents stay safe online and to assist with unsafe situations. For instance, users who ask Siri how they can report child exploitation will be told how and where they can file a report.

Siri, Spotlight, and Safari Search have also been updated to handle queries related to child exploitation. Users who perform such a query will be told that interest in these topics is problematic and will be given resources to get help for this issue.

In December, Apple quietly abandoned its plans to detect Child Sexual Abuse Material (CSAM) in iCloud Photos, following criticism from policy groups, security researchers, and politicians. Their concerns included the possibility of false positives, as well as possible “backdoors” that would allow governments or law enforcement to monitor users’ activity by also scanning for other types of images. Critics also claimed that the feature was less than effective in identifying actual child sexual abuse images.

Apple said the decision to abandon the feature was “based on feedback from customers, advocacy groups, researchers and others… we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

For more information, visit Apple’s Child Safety website.

An earlier version of this article was published by Mactrast.