Experts are worried that a South Korean university could trigger the robot apocalypse

More than fifty of the world’s leading experts in artificial intelligence have come together to try and halt the actions of a South Korean university that they believe could basically be building Skynet.

They’re not using such layman’s terms as “Skynet”, “Terminator” or “Did you call moi a dip-shit?” though. What they’re concerned about is a joint research centre being opened by the Korea Advanced Institute of Science and Technology (KAIST) and Hanwha Systems.

Hanwha make some really – to use a boffin’s term – terrifying shit, including cluster munitions, which are banned pretty much everywhere under the Convention on Cluster Munitions. (Excluded in “pretty much everywhere”: China, Russia and the USA – the US said that cluster bombs were legal, had a “clear military utility in combat” and weren’t as bad as other, unspecified, weapons.)

This new venture between KAIST and Hanwha, which aims to develop artificial intelligence (AI) technologies for military weapons, has united AI experts from all over the world in opposition to what they see as a dangerous step on the journey towards entirely autonomous killing machines.

“We can see prototypes of autonomous weapons under development today by many nations including the US, China, Russia, and the UK,” says the open letter, written by Professor Toby Walsh of the University of New South Wales and co-signed by over 50 experts from over 30 different countries. “We are locked into an arms race that no one wants to happen. KAIST’s actions will only accelerate this arms race. We cannot tolerate this. If developed, autonomous weapons will […] permit war to be fought faster and at a scale greater than ever before. This Pandora’s box will be hard to close if it is opened.”

KAIST has an impressive pedigree in robotics, having won the DARPA Robotics Challenge in 2015. Hanwha is one of South Korea’s biggest conglomerates and, awesomely, was founded under the name Korea Explosives Co.

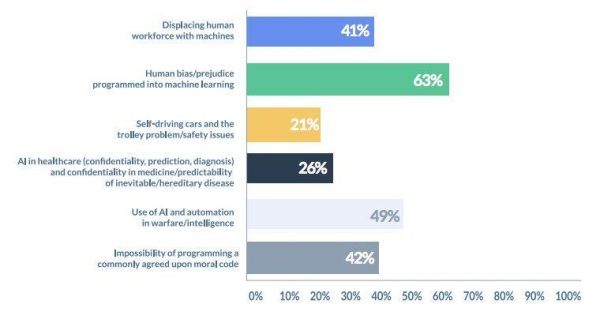

AI in warfare is a massive global concern. Obviously there are worries about killing machines turning on humanity itself, but there are others too: the abstraction of responsibility it creates is thought to make killing easier in general, and to remove essentially all consequences for unlawful killings, breaches of international treaties and the like.

In June, there is going to be a massive summit in Geneva on AI For Good, which aims to apply this technology to issues such as sustainability and humanitarian crises rather than to killing people. Plus, if an autonomous bean-planting robot goes on a rampage, we just end up with loads of beans rather than a lifeless, irradiated, post-apocalyptic wasteland, and that is to be applauded.