Google and Microsoft Are in a Race to Ruin Search With AI

It’s been a rough year for the tech industry—investors are skittish, layoffs are dominating the news, and a pesky tool called ChatGPT threatens to destroy search engines. So, ironically, Google and Microsoft are responding to this threat by racing to ruin their own search engines.

Let’s start at the top: ChatGPT is not a reliable source for any information. It’s an interesting tool, and it can fulfill several tasks, but it isn’t intelligent and lacks any sort of comprehension or knowledge. Unfortunately, chatbots like ChatGPT can feel very authoritative, so a ton of people (including journalists, investors, and workers within the tech sector) mistakenly believe that they’re knowledgeable and accurate.

Now, you could say that tools like Google Search are unintelligent, and you’d be right. You could also criticize the effectiveness of Google Search or Bing: these services are gamed by websites, and as a result, they bring a ton of garbage to the surface of the internet. But unlike ChatGPT, Google doesn’t answer questions. It just links you to websites, leaving you to figure out if something is right or wrong. It’s an aggregation tool, rather than an authority.

But the hype machine is sending ChatGPT into the stratosphere, and big tech wants to follow along. After tech blogs and random internet personalities hailed ChatGPT as the downfall of Google Search, Microsoft made a big fat investment in the tech and started integrating it with Bing. Our friends at Google responded with Bard, a custom AI chatbot that relies on the experimental LaMDA model.

Microsoft and Google are in a race to see who can rush this technology out the door. Not only will they lend authority to tools like ChatGPT, but they’ll present this unpolished AI to users who don’t know any better. It’s irresponsible, it will amplify misinformation, and it will encourage people to let research fall by the wayside.

Not to mention, this technology will force Microsoft and Google to become the arbiters of what is right or wrong. If we’re going to treat conversational AI as an authority, it needs to be constantly adjusted and moderated to weed out inaccuracies. Who will make those decisions? And how will people respond when their beliefs or their “knowledge” aren’t represented by the fancy AI?

I should also note that these conversational models are built on existing data. When you ask ChatGPT a question, it might respond using information from a book or an article, for example. And when CNET tried testing an AI writer, it published a ton of plagiarized content. The data that drives this tech is a copyright nightmare, and regulation seems like a strong possibility. (If it happens, regulation could dramatically increase the cost of conversational AI development. Licensing all of the content required to build this tech would cost a fortune.)

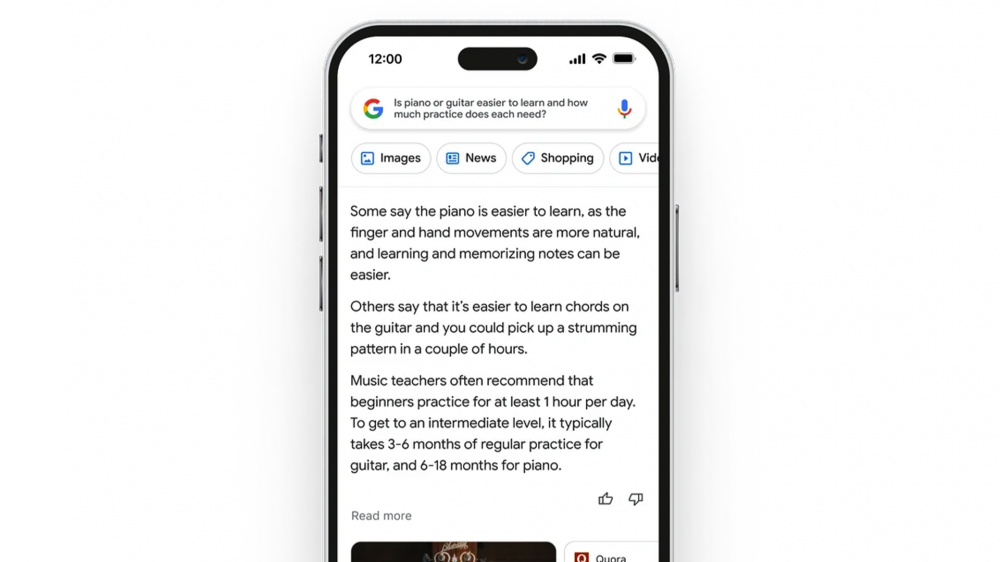

Early glimpses of Google’s Bard show that it may be deeply integrated within Search, presenting information inside of the regular search engine and within a dedicated chat box. The same goes for Bing’s conversational AI. These tools are now being tested and finalized, so we expect to learn more in the coming weeks.

By the way, Google and Microsoft have both published a bunch of AI principles. It may be worth reading these principles to see how the companies are approaching AI development.