To curb identity theft, this AI app warns you when your fingerprints are visible in pictures

You don’t need to be reading conspiracy theories about global elites secretly being shape-shifting reptiles to become deeply paranoid online. In fact, a quick glance at your favorite computer science academic journal (and everyone’s got at least one, right?) will be enough to convince you that, when it comes to keeping yourself safe and secure, you’ve been doing everything all wrong.

There is a plethora of potential identifiers in virtually every picture, from obvious ones like faces, which can be recognized by machines, to ones that sound like they come straight from a James Bond movie, such as extracting fingerprints from high-res photos that show your hands. Fortunately, researchers at Germany’s Max Planck Institute for Informatics are on the case, and in a newly published paper, they reveal their solution: an AI that proactively scans every image you prepare to post and alerts you if it’s secretly revealing data you really don’t want to share.

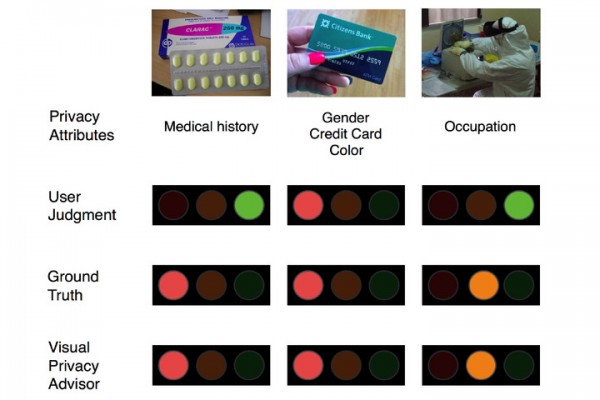

The system they describe would involve a deep learning neural network trained to recognize revealing patterns in large amounts of data, and then to search for those same patterns in subsequent images. For their work, the Max Planck Institute researchers collected thousands of images, and then sorted them into 68 different privacy categories, based on the kind of information they reveal.

They also conducted surveys into how much data people were happy to share online, and how much they thought particular photos were revealing — thereby highlighting the fact that we, as puny humans, really aren’t that good at parsing this particular task.

The AI would therefore be a weight off users’ minds, as we would be alerted to potential privacy issues without having to scour every picture, Where’s Waldo?-style, for obscure revealing clues.
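For the curious, here is a rough sketch of what that alerting step might look like. It is not the researchers' code: the category names, the scores, and the `privacy_alerts` helper are all made up for illustration, and the scores simply stand in for whatever a trained network would output for one photo. The idea is just to compare each category's score against how comfortable the user says they are sharing that kind of information.

```python
from typing import Dict, List


def privacy_alerts(
    scores: Dict[str, float],
    comfort: Dict[str, float],
    default_comfort: float = 0.5,
) -> List[str]:
    """Return the categories whose score exceeds the user's comfort level.

    scores:  hypothetical per-category confidence (0.0 to 1.0) for one photo.
    comfort: how high a score the user tolerates per category before a warning.
    Categories the user has not configured fall back to default_comfort.
    """
    flagged = [
        category
        for category, score in scores.items()
        if score > comfort.get(category, default_comfort)
    ]
    # Show the most confident detections first.
    return sorted(flagged, key=lambda category: scores[category], reverse=True)


# Made-up numbers standing in for a trained network's output on one photo.
scores = {"fingerprint": 0.91, "face": 0.40, "license_plate": 0.05}
# This user is very protective of fingerprints, relaxed about faces.
comfort = {"fingerprint": 0.10, "face": 0.80}

for category in privacy_alerts(scores, comfort):
    print(f"Warning: this photo may reveal your {category}")
```

A per-category comfort level is one plausible design choice here, since the surveys suggest people differ in what they are happy to share.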

No, the privacy-minded Siri isn’t ready yet, largely because some of the technology described isn’t yet up to the necessary performance standards. But with privacy an increasingly big issue, and deep learning advancing at an astonishingly rapid rate, it’s good to know that people are thinking of new ways to keep us safe.

Who knows? In 10 years’ time, a tool like this could be as ubiquitous as spell check!